“Sgt Wadleigh! Sgt Wadleigh!” It was around 4 am in the desert north of Kuwait City as I was woken by the nearby shouts of a couple squad members on watch. I jumped out of my sleeping bag and ran to their position. Landing next to them behind the rock formation, weapons ready, I immediately saw the “threat”.

The bright headlights beaming at us only 30 feet away, engine revving, I could see what appeared to be a Range Rover that had driven through/over the razor wire in front of our position. And with both hands out the window, the driver repeatedly said “No English!”.

We quickly assessed this was not a threat, motioned him away, and watched as he sped off into the desert night. Issue resolved, hearts settled, carry on.

So what does a military story have to do with the Cloud?

I’m a Marine veteran and also an AWS Cloud Engineer. In my time in tech since serving I have found many of the traits developed (still a wip) during my service have translated well.

- Handling a random late-night driver or an on-call production incident both require judgment and decisiveness.

- Simply showing up when called upon to meet the team needs requires dependability.

- Communicating effectively to reach desired outcomes requires tact and bearing.

- Being a good team member oftentimes requires unselfishness and even enthusiasm.

- And if you want to grow it requires initiative, knowledge and I would say integrity.

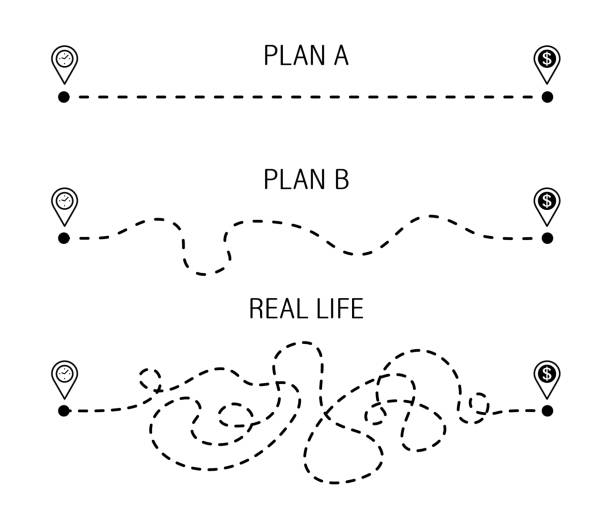

The Marine Corps motto is Semper Fidelis (Latin for “Always Faithful”). An adapted expression was Semper Gumby – “Always Flexible.” We had to be flexible to pivot as needed to accomplish any mission.

It’s with these last traits and this adapted expression that I’m writing now. I want to continue learning and growing/adapting technically and professionally. And to grow I have to be honest about where I’m at – my experiences and my gaps.

“The characteristics of a superior programmer have almost nothing to do with talent and everything to do with a commitment to personal development… The characteristics that matter most are humility, curiosity, intellectual honesty, creativity and discipline, and enlightened laziness.”

– Steve McConnell: Code Complete – Personal Character

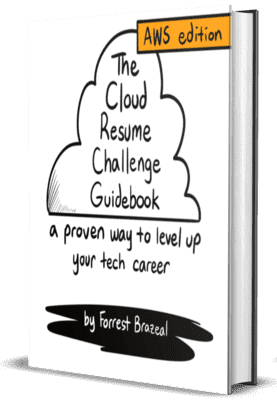

I aspire to be the caliber of engineer McConnell describes. I haven’t arrived, but I can head in the right direction. With this in mind I found The Cloud Resume Challenge by Forrest Brazeal.

It’s a guidebook with a project spec for hosting your resume in the Cloud using Serverless Computing while employing DevOps practices. Challenge accepted!

Note: In the Marines an Operations Order is formatted to organize and understand an operation using the acronym “SMEAC”. This post uses an “adapted” version to share my project.

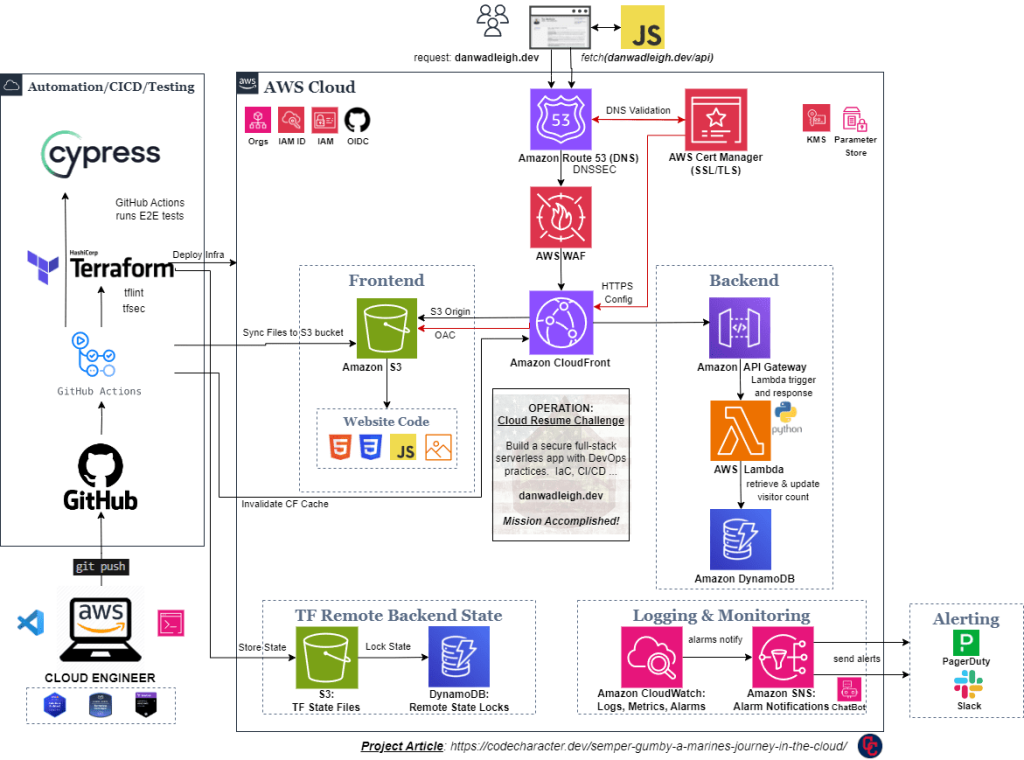

Operation: Cloud Resume Challenge

A little orientation to start. The challenge consists of building a full-stack serverless web app of your resume with a visitor counter in the cloud. And it comes with a strong recommendation to get a cloud certification along with several mod tracks to extend the project, including security and DevOps practices.

Now it was time to start the project and create this …

GitHub Repos: Frontend and Backend

S – SITUATION

I’ve been working with and supporting production apps in AWS for over 5 years now. In tech I’ve gone from Zero-to-Pro on the job as a Windows Admin, Linux Admin, and AWS Cloud Admin. I know I can learn quickly and produce.

But if I’m being honest, there are gaps I’d like to fill in my knowledge and experience. Even if that means wrestling with a little imposter syndrome on occasion. I’ve done it before, I will do it again. Serverless Computing (Development) and DevOps are at the top of my interest list.

I’ve learned in tech we have to be flexible to learn what the job requires. In the market we have to be flexible, and willing, to learn what’s needed if we want to grow. And I want to learn and grow.

M – MISSION

To upskill identified gap areas by building hands-on working projects that leverage the Cloud, Serverless Computing and DevOps practices, in order to prepare for future Cloud roles.

E – EXECUTION

Preparation

Time to ensure my local and cloud environments were ready.

- AWS Organizations and IAM Identity Center: Set up a new account for the project and configured the CLI.

- GitHub Repositories: Set up a development environment with VS Code and cloned the repos.

Time to step off!

Certifications

Fortunately I had already attained some relevant certifications:

- AWS Certified Solutions Architect Associate

- AWS Cloud Quest Serverless Developer

- HashiCorp Certified Terraform Associate

In all transparency, I passed the AWS cert 5 years ago but let it lapse … renewing it is an early 2024 target. I also had some intermittent hands-on experience with several DevOps tools (Chef, Ansible, Jenkins, Terraform).

Creating the Frontend

With my environment ready to go it was time to start building.

- HTML & CSS

- I completed freeCodeCamp’s Responsive Web Design course a while back, but wanted to focus on cloud skills. So I found a minimalist static template and modified that.

- S3 Static Website

- Next deployed it online, managing bucket creation, versioning, and public access configs.

- CloudFront & ACM (HTTPS)

- Then created a CloudFront distribution for the S3 bucket to deliver content, using Origin Access Control (OAC) to restrict access and AWS Certificate Manager (ACM) to automate the SSL cert provisioning.

- Route 53 (DNS)

- Then set up a custom domain managing DNS with Route 53.

- Web Application Firewall (WAF)

- And lastly, AWS WAF to protect CloudFront (S3) and API Gateway against common exploits and bots.

This chunk went fairly smoothly as I had worked with all the services on the frontend in previous roles. Now to the backend learning.

Resume Site: https://DanWadleigh.dev/

Building the Backend

This was where things started to get really fun. The resume site needed a visitor counter that would fetch and update the count.

- DynamoDB

- I set up an Amazon DynamoDB table to store the counter. Simple enough.

- API Gateway (API)

- Now on to creating an API with API Gateway that would communicate with my resume site and update the visitor count in DynamoDB.

- Lambda (Python)

- I wrote a Lambda function in Python using the boto3 library and integrated it with the API Gateway to update the visitor count in DynamoDB.
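For readers who want a picture of this step, here is a minimal sketch of what a counter function like this might look like. It is not the code from my repo: the table name, key, and `visits` attribute are hypothetical, and the `table` parameter exists only so the logic can be exercised without AWS. It relies on DynamoDB's `ADD` update action, which increments the number atomically and creates the attribute if it is missing.

```python
import json
from typing import Any, Optional

# Hypothetical names for illustration only.
TABLE_NAME = "visitor-counter"
COUNTER_KEY = {"id": "resume"}


def increment_visits(table: Any) -> int:
    """Atomically add 1 to the counter item and return the new total."""
    response = table.update_item(
        Key=COUNTER_KEY,
        UpdateExpression="ADD visits :one",
        ExpressionAttributeValues={":one": 1},
        ReturnValues="UPDATED_NEW",
    )
    # DynamoDB numbers come back as Decimal, so convert before serializing.
    return int(response["Attributes"]["visits"])


def lambda_handler(event: dict, context: Any, table: Optional[Any] = None) -> dict:
    """Entry point: bump the counter and return it as a JSON body."""
    if table is None:
        import boto3  # imported lazily so the logic can be tested offline

        table = boto3.resource("dynamodb").Table(TABLE_NAME)
    count = increment_visits(table)
    return {"statusCode": 200, "body": json.dumps({"count": count})}
```

Doing the increment in a single `update_item` call avoids a read-then-write race when two visitors hit the site at the same time.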

I ran some “quick” tests in the console followed by Postman and we had an updating counter. It was the calm before the storm. Or rather, time to be flexible while learning. The operation was about to come under fire.

Integrating the Frontend & Backend

Now to just write a little JavaScript fetch script and glue the frontend and backend together. I just had to trigger the Lambda function from my static site and see the visitor count roll. Followed up with some testing. How hard could that be?

- JavaScript

- I had completed Jad Joubran’s Learn JavaScript course some time back. This code seemed simple enough.

- Cypress (Testing)

- I had previously written some Python tests using pytest for a take-home exercise, which opened my eyes to testing in a very practical way. This time I wanted to try something new with Cypress, and found the process very informative and fulfilling.

Did anyone mention CORS issues were a pain? Talk about an enemy combatant trying to disrupt the operation. It sent me circling back to my API Gateway and Lambda function a few times.

I scoured the internet and found more resources than I could ever need. Then in a victorious moment, CORS waved the white flag and BOOM! … the counter displayed and updated on my resume site.

I followed that victory up with learning some Cypress and writing some tests to validate future deployments.
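For anyone facing the same CORS fight, here is a rough sketch of the kind of fix that often applies. It assumes a REST API with Lambda proxy integration, where the function itself has to return the CORS headers. The origin, allowed methods, and function names are placeholders, not my actual configuration.

```python
import json

# Placeholder origin; it must match the site's origin exactly as the browser sends it.
ALLOWED_ORIGIN = "https://example.com"


def cors_headers(origin: str = ALLOWED_ORIGIN) -> dict:
    """Headers the browser checks before exposing the response to page scripts."""
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "GET,POST,OPTIONS",
        "Access-Control-Allow-Headers": "Content-Type",
    }


def build_response(status_code: int, payload: dict) -> dict:
    """Wrap a payload in a proxy-style response that carries the CORS headers."""
    return {
        "statusCode": status_code,
        "headers": {**cors_headers(), "Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handle(event: dict, count: int) -> dict:
    """Answer preflight requests with headers only; otherwise return the count."""
    # REST API proxy events carry the HTTP verb in "httpMethod".
    if event.get("httpMethod") == "OPTIONS":
        return {"statusCode": 204, "headers": cors_headers(), "body": ""}
    return build_response(200, {"count": count})
```

If the headers are missing from either the preflight or the actual response, the browser blocks the `fetch` call even though the same request works fine in Postman.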

Automation and CI/CD

In this project, automation and CI/CD were listed as one of the last steps. But I had written the Infrastructure as Code and GitHub Actions workflows throughout the process.

- Infrastructure as Code (Terraform)

- Aside from the obvious automation benefits, I found the process really forced me to know exactly HOW and WHY things worked. No black box console config magic happening. If I didn’t codify things correctly, they weren’t provisioned and simply didn’t work. Running tfsec helped tighten things up as well.

- CI/CD (GitHub Actions)

- Oh, the glorious green checkmark of success after a git push to make your day. It’s like coming out of the desert and someone handing you a cookie. How did we ever do it differently?

- Note: when we flew out of Kuwait, it was Christmas day, and the helo crew chief handed us a tin of Christmas cookies. Best. cookie. ever!

A – ADMIN & LOGISTICS

In an effort to securely and reliably deploy updates I made a few administrative configuration changes.

- Terraform Remote Backend

- Configured a remote backend on AWS with S3 and DynamoDB to store state and handle locks.

- S3 Sync & CloudFront Cache Invalidation

- Updated the pipeline to invalidate the CloudFront cache whenever the website code in the S3 bucket is synchronized.

- OIDC Provider

- Updated the pipeline to create an IAM OIDC identity provider that trusts GitHub, which removed the need to store AWS credentials as GitHub repository secrets.
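The cache invalidation step above can be sketched in Python with boto3. This is only an illustration under assumptions: a pipeline may just as well use the AWS CLI, and the function names and the `/*` wildcard path are my own choices for the example. The `client` parameter exists so the logic can be tried without touching AWS.

```python
import time
from typing import Any, Optional, Sequence


def invalidation_request(
    distribution_id: str,
    paths: Sequence[str] = ("/*",),
    caller_reference: Optional[str] = None,
) -> dict:
    """Build the keyword arguments for CloudFront's create_invalidation call."""
    items = list(paths)
    return {
        "DistributionId": distribution_id,
        "InvalidationBatch": {
            "Paths": {"Quantity": len(items), "Items": items},
            # CallerReference must be unique per request; a timestamp works.
            "CallerReference": caller_reference or str(time.time_ns()),
        },
    }


def invalidate(distribution_id: str, client: Any = None) -> str:
    """Submit the invalidation and return its ID."""
    if client is None:
        import boto3  # imported lazily so the builder can be tested offline

        client = boto3.client("cloudfront")
    response = client.create_invalidation(**invalidation_request(distribution_id))
    return response["Invalidation"]["Id"]
```

Running something like this right after the S3 sync means visitors see the new version immediately instead of waiting for cached copies to expire.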

C – COMMAND & SIGNAL

And last but not least, we have to know if our application is having issues. I enabled some monitoring and alerting.

- CloudWatch: logging, metrics and alarms.

- SNS: notifications for the alarms.

- PagerDuty: alerting.

- Slack: and more alerting, integrated through AWS Chatbot.
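As a rough illustration of how the CloudWatch and SNS pieces connect, here is a sketch of an alarm on Lambda errors that notifies an SNS topic. The alarm name, threshold, and period are assumptions picked for the example, and infrastructure like this would more typically be defined in Terraform than created from a script.

```python
from typing import Any


def lambda_error_alarm(function_name: str, sns_topic_arn: str) -> dict:
    """Build put_metric_alarm arguments: fire on any error within one minute."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations means no data points; treat that as healthy.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(function_name: str, sns_topic_arn: str, client: Any = None) -> None:
    """Create or update the alarm in CloudWatch."""
    if client is None:
        import boto3  # imported lazily so the builder can be tested offline

        client = boto3.client("cloudwatch")
    client.put_metric_alarm(**lambda_error_alarm(function_name, sns_topic_arn))
```

The SNS topic in `AlarmActions` is the fan-out point: email, PagerDuty, and chat integrations can all subscribe to it.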

OPERATION DEBRIEF

Much like in strength training, the real results come from hard work repeated consistently over time. If it was easy, everyone would be fit and strong. But it’s not. It requires perseverance, at times some pain, and a little humility to know where you’re at so you can get where you want to be.

I learned a lot during this process. Sure I’d like to say it was easy because I have some “experience.” But in reality, I wrestled, failed, wrestled more, failed more, and eventually outlasted the competition.

The summary of “success” to me is confirming I can in fact learn quickly (enough) and Google well (enough). That and it was truly an opening to future operations. I plan to continue learning and go deeper with AWS, Serverless and DevOps.

Operation: Cloud Resume Challenge … Mission accomplished!